Machine Learning FAQ

A list of questions about Machine Learning

I have been reading the book “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” recently and found the first chapter very insightful. It gathers most of the conversations that are scattered around this space into one cohesive chapter. At the end of that chapter, there is a list of straightforward, natural questions for anyone who wants to understand more about Machine Learning. So this is my attempt to answer them, and I am going to keep it very short!

The Machine Learning FAQ

How would you define Machine Learning?

Basically, it’s a machine that takes an input and gives you an output, and it learns how to do that from data instead of being explicitly programmed with rules. And yes, the output isn’t guaranteed to be correct.

Can you name four types of problems where it shines?

Image recognition, Natural Language Processing (NLP), personalized recommendations, and playing the game of Go.

What is a labeled training set?

A label is the correct identifier of a piece of data, aka the ground truth. A training set is a dedicated set of data used to train the algorithm, which eventually outputs a model. A labeled training set is therefore a training set where every example comes with its label. The resulting model then takes an input and produces an output as a prediction.

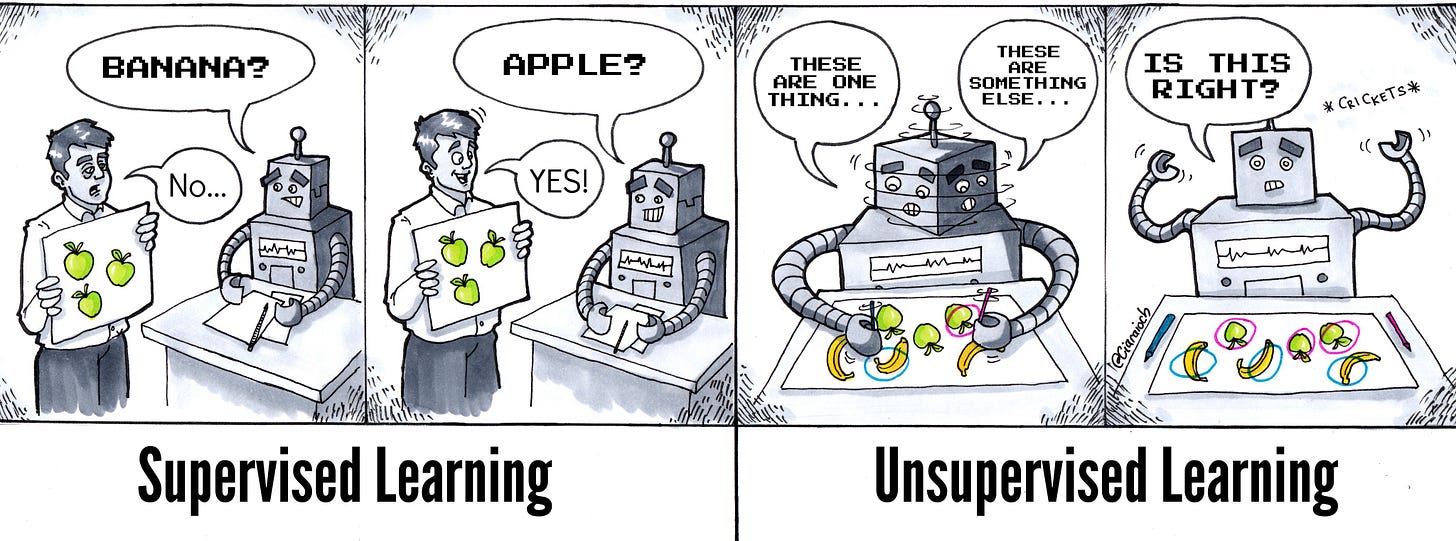

What are the two most common supervised tasks?

Classification and regression, i.e. predicting a (usually numeric) value.

Can you name four common unsupervised tasks?

Clustering, dimensionality reduction, anomaly detection and association rule learning, which are worth explaining in a bit more detail here:

Clustering is basically grouping the data into groups that might be hidden in the data, so there is no correct answer to start with.

Dimensionality reduction reduces the number of features of a data point so that it has fewer dimensions and is hence less complicated (e.g. if height and weight change very similarly whenever the target variable changes, the two can probably be combined into one feature without losing too much information).

Anomaly detection is quite self-explanatory: spotting things like fraudulent transactions, machine failures, etc.

Association rule learning looks for whether one thing has a relationship with another. The classic beer and diapers example is one of them.

What type of Machine Learning algorithm would you use to allow a robot to walk in various unknown terrains?

“Walk in various unknown terrains” is quite vague, but I guess we can say this is a reinforcement learning problem, and we would probably need some neural network to solve it.

What type of algorithm would you use to segment your customers into multiple groups?

Various clustering algorithms, like K-Means, DBSCAN, Hierarchical Cluster Analysis (HCA), etc.
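To make the clustering idea a bit more concrete, here is a minimal K-Means sketch in plain Python (no scikit-learn, so it is not the book's code). The customer data, the `kmeans` function and its parameters are made up for illustration, and a real project would more likely use a library implementation.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Tiny K-Means for 2-D points; returns (centroids, assignments)."""
    rng = random.Random(seed)
    # Start from k distinct data points picked at random.
    centroids = rng.sample(points, k)
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignments = [
            min(
                range(k),
                key=lambda c: (p[0] - centroids[c][0]) ** 2
                + (p[1] - centroids[c][1]) ** 2,
            )
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, assignments


# Hypothetical customers: (visits per month, average basket size)
customers = [(1, 10), (2, 12), (1, 11), (9, 80), (10, 85), (11, 90)]
centroids, groups = kmeans(customers, k=2)
print(groups)  # occasional shoppers share one group id, big spenders the other
```

Note that nobody told the algorithm which customer belongs where; the two groups come out of the data alone, which is what makes this unsupervised.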

Would you frame the problem of spam detection as a supervised learning problem or an unsupervised learning problem?

I would frame it as a supervised learning problem, a classic spam classification problem. The tricky part here is the definition of spam: it’s not easy to agree on something these days, you know.

What is an online learning system?

It means that instead of learning from all the data in the dataset at once, the system learns incrementally: it only looks at the new data coming in (one instance or a small batch at a time) and keeps updating its generalizations from these relatively small updates.
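Here is a rough sketch of what "learning from new data as it arrives" can look like. It assumes a tiny linear model updated with one gradient step per incoming instance; the class name `OnlineLinearModel`, the learning rate and the simulated stream are all invented for this example.

```python
class OnlineLinearModel:
    """Tiny linear model y = w * x + b, updated one instance at a time."""

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def learn_one(self, x, y):
        # One small gradient step using only the newest instance.
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error


model = OnlineLinearModel()

# Simulated stream of instances following y = 2x + 1.
stream = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0, 1.5, 2.0)] * 400
for x, y in stream:
    model.learn_one(x, y)  # the instance can be thrown away after this

print(model.w, model.b)  # should end up close to 2 and 1
```

The model never sees the whole dataset at once, and each instance can be discarded after it has been used, which is also why the same idea helps when the data does not fit in memory.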

What is out-of-core learning?

It means the data is so big that it cannot fit in one machine’s main memory, so the algorithm loads it in chunks and learns from one chunk at a time. Another name for this is incremental learning, and it works like an online learning system. However, as compute and storage are getting cheaper and more advanced, there are more ways to get around this now.

What type of learning algorithm relies on a similarity measure to make predictions?

Algorithms that are instance-based rely on comparing the examples they have stored with the new instance, hence the similarity measure. K-Nearest Neighbors (KNN) is an example.
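A bare-bones KNN classifier shows where the similarity measure comes in. This sketch assumes Euclidean distance as the similarity measure and a majority vote among the `k` closest stored examples; the toy points and labels are made up.

```python
import math
from collections import Counter


def knn_predict(training_set, new_point, k=3):
    """Predict a label by majority vote among the k nearest stored examples."""
    # Similarity measure: Euclidean distance (smaller means more similar).
    neighbors = sorted(
        training_set, key=lambda item: math.dist(item[0], new_point)
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Labeled training set: (features, label)
training_set = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((2.0, 1.5), "small"),
    ((8.0, 8.0), "large"),
    ((8.5, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(knn_predict(training_set, (1.2, 1.4)))
print(knn_predict(training_set, (8.7, 8.2)))
```

There is no real training step here: the "model" is just the stored examples, and all the work happens at prediction time.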

What is the difference between a model parameter and a learning algorithm’s hyperparameter?

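A model parameter is a value that the model learns from the training data, like the weights of a linear model. It is not the input to the model; those are the features. A hyperparameter is a setting of the learning algorithm itself, something you tweak before training (e.g. the learning rate). So we have:

Algorithm(Hyperparameters) + Data = Model(Model parameters)

Input + Model(Model parameters) = Output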
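In the usual terminology, the values learned during training are the model parameters, and the knobs you set before training are the hyperparameters. The sketch below tries to show both in one place, assuming a simple line fit with batch gradient descent. The function name `fit_line` and the chosen values are illustrative only.

```python
def fit_line(xs, ys, learning_rate=0.05, n_epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error.

    learning_rate and n_epochs are hyperparameters: chosen before training.
    w and b are model parameters: learned from the data.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = 2 / n * sum((w * x + b - y) for x, y in zip(xs, ys))
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Training data following y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)  # Algorithm(hyperparameters) + Data = Model
prediction = w * 5.0 + b  # Input + Model(parameters) = Output
print(w, b, prediction)
```

Changing `learning_rate` changes how the training goes, but it never appears in the final prediction formula; only `w` and `b` do.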

What do model-based learning algorithms search for? What is the most common strategy they use to succeed? How do they make predictions?

They search for the best values of the model parameters, so that the model generalizes well to new data points. The common way is to use the training data to “fit” the model, usually by minimizing a cost function that measures how bad the model’s predictions are on the training data. The model then makes predictions by taking in new data points that it has never seen. If it also predicts well on held-out data like that (usually called the test data), then we have a good enough model.

Can you name four of the main challenges in Machine Learning?

Mainly either bad data or bad algorithms.

Bad data:

Not enough quality data (look at the industry that draws the boundaries of the lines on the road for self-driving cars)

Data is not representative enough (facial recognition that performs poorly for people of color)

Data quality is low (like missing data of a customer)

Bad algorithms:

Overfitting, which means the model only works well with the training data but not with real data

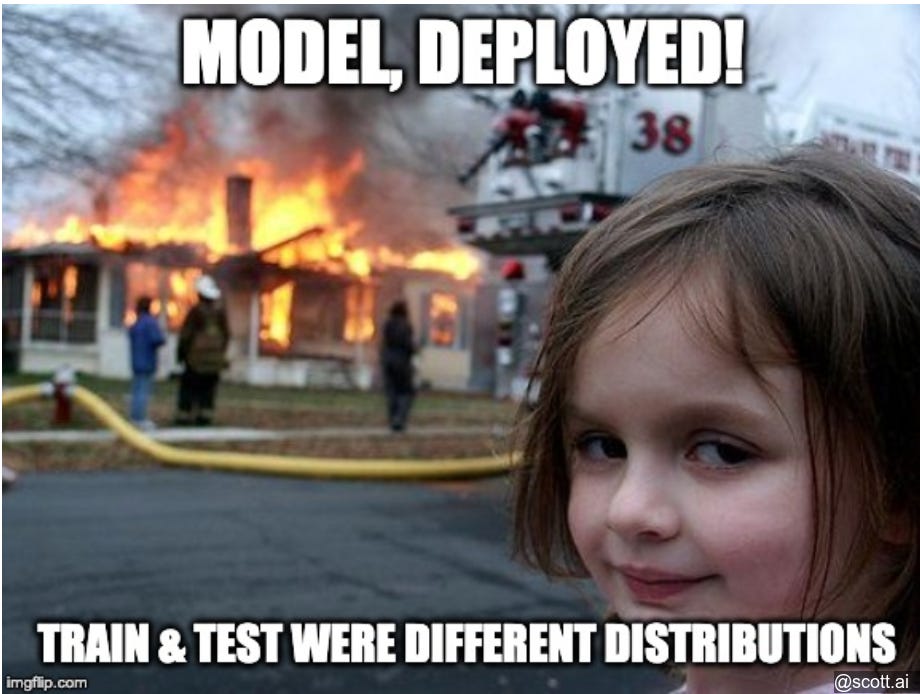

If your model performs great on the training data but generalizes poorly to new instances, what is happening? Can you name three possible solutions?

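This is what overfitting looks like. Possible causes are that the training samples are very skewed/imbalanced, that the data is not properly shuffled before it is split, or simply that there is not enough training data for the model to learn from. The matching solutions are to gather more (and more representative) training data, to shuffle and validate properly (e.g. with cross-validation), or to simplify or regularize the model.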

What is a test set and why would you want to use it?

The test set is a portion of the data held out from training. You use it to estimate how well the model will perform on data it has never seen, which is how you find out whether it overfits before it goes to production.
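A small experiment can show why a held-out test set matters. The sketch below assumes a toy dataset and two made-up models: a "memorizer" that just stores the training examples (an extreme case of overfitting) and a simple threshold rule. The helper names `train_test_split` and `accuracy` are written here from scratch and are not the scikit-learn functions.

```python
import random


def train_test_split(data, test_ratio=0.2, seed=42):
    """Shuffle a copy of the data and hold out a test_ratio share of it."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(predict, dataset):
    """Fraction of examples in dataset that predict gets right."""
    return sum(predict(x) == y for x, y in dataset) / len(dataset)


# Toy data: the label is True when x is at least 50.
data = [(x, x >= 50) for x in range(100)]
train_set, test_set = train_test_split(data)

# Model A memorizes the training set and guesses False for anything unseen.
memory = dict(train_set)


def memorizer(x):
    return memory.get(x, False)


# Model B learns one threshold halfway between the two classes.
highest_false = max(x for x, y in train_set if not y)
lowest_true = min(x for x, y in train_set if y)
threshold = (highest_false + lowest_true) / 2


def threshold_model(x):
    return x > threshold


for name, model in (("memorizer", memorizer), ("threshold", threshold_model)):
    print(name, accuracy(model, train_set), accuracy(model, test_set))
```

The memorizer is perfect on the training set, so looking at training accuracy alone would make it seem like the better model. Only the test set reveals that it has learned nothing that carries over to new data.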

What is the purpose of a validation set?

It is a portion of the training set that is held out to compare the performance of different models and to fine-tune their hyperparameters.

What can go wrong if you tune hyperparameters using the test set?

It could overfit the test set, since the hyperparameters are being tuned to do well on that specific set of data. The error measured on the test set then looks better than it really is. A validation set should be used here instead.

What is repeated cross-validation and why would you prefer it to using a single validation set?

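Repeated cross-validation trains and validates the model several times, each time on a different split of the training data, and averages the results. It is generally preferred because the score no longer depends on one lucky or unlucky validation set, and hence it better shows whether the model generalizes across different situations. The cost is that training time is multiplied by the number of splits.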
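As a rough illustration of the mechanics, here is a from-scratch sketch of repeated k-fold cross-validation. It assumes a deliberately silly model that always predicts the mean of the training targets, just to keep the focus on the splitting. The function names are invented for this example.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def repeated_cross_validation(data, fit, score, k=5, repeats=3):
    """Run k-fold cross-validation `repeats` times with different shuffles."""
    scores = []
    for repeat in range(repeats):
        for train_idx, val_idx in k_fold_indices(len(data), k, seed=repeat):
            model = fit([data[i] for i in train_idx])
            scores.append(score(model, [data[i] for i in val_idx]))
    return mean(scores), scores


# Hypothetical model: always predict the mean of the training targets.
def fit_mean(train_targets):
    return mean(train_targets)


def mean_absolute_error(model, validation_targets):
    return mean(abs(model - y) for y in validation_targets)


targets = [float(v) for v in range(20)]
average_error, all_errors = repeated_cross_validation(
    targets, fit_mean, mean_absolute_error
)
print(len(all_errors), average_error)
```

Every data point gets used for validation once per repeat, and the final number is an average over 15 train/validate rounds instead of a single one.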

Hope you all enjoyed the FAQ here!